Solving the Challenge of Evolving Data Schemas with Agentic AI

Managing data pipelines can be complex, especially when dealing with data coming from upstream applications that constantly evolve. A significant challenge arises when these applications send unstructured data or modify the structure of their messages – a process known as schema evolution. Traditionally, handling this involves significant manual effort and coordination.

The Traditional Approach vs. the Problem

Typically, data flows from applications sending messages through systems like Kafka, which are then consumed and stored, perhaps on platforms like Delta Lake or Iceberg. Downstream consumers, such as other micro-services, applications, agents, or analytical tools, rely on a schema registry to ensure the message shape is consistent. In a traditional setup, when an upstream application changes its message structure (i.e., the schema evolves), it requires a collaborative effort between the product manager, data engineer, and upstream developer. They must agree on the new schema, update the schema registry, and ensure the ETL pipeline and consumers can handle the changes before the new data fields become available for analytics.

However, this manual process struggles to keep pace with frequent changes and the introduction of unstructured data. The video demonstrates this by showing a producer simulating different versions of an application sending events with evolving message shapes. Initially, when version 1.1 introduces a new field like "phone," the data arrives, but because the schema registry hasn't been updated manually, the new field isn't recognized or available for querying.

Introducing Agentic AI to Automate Schema Evolution

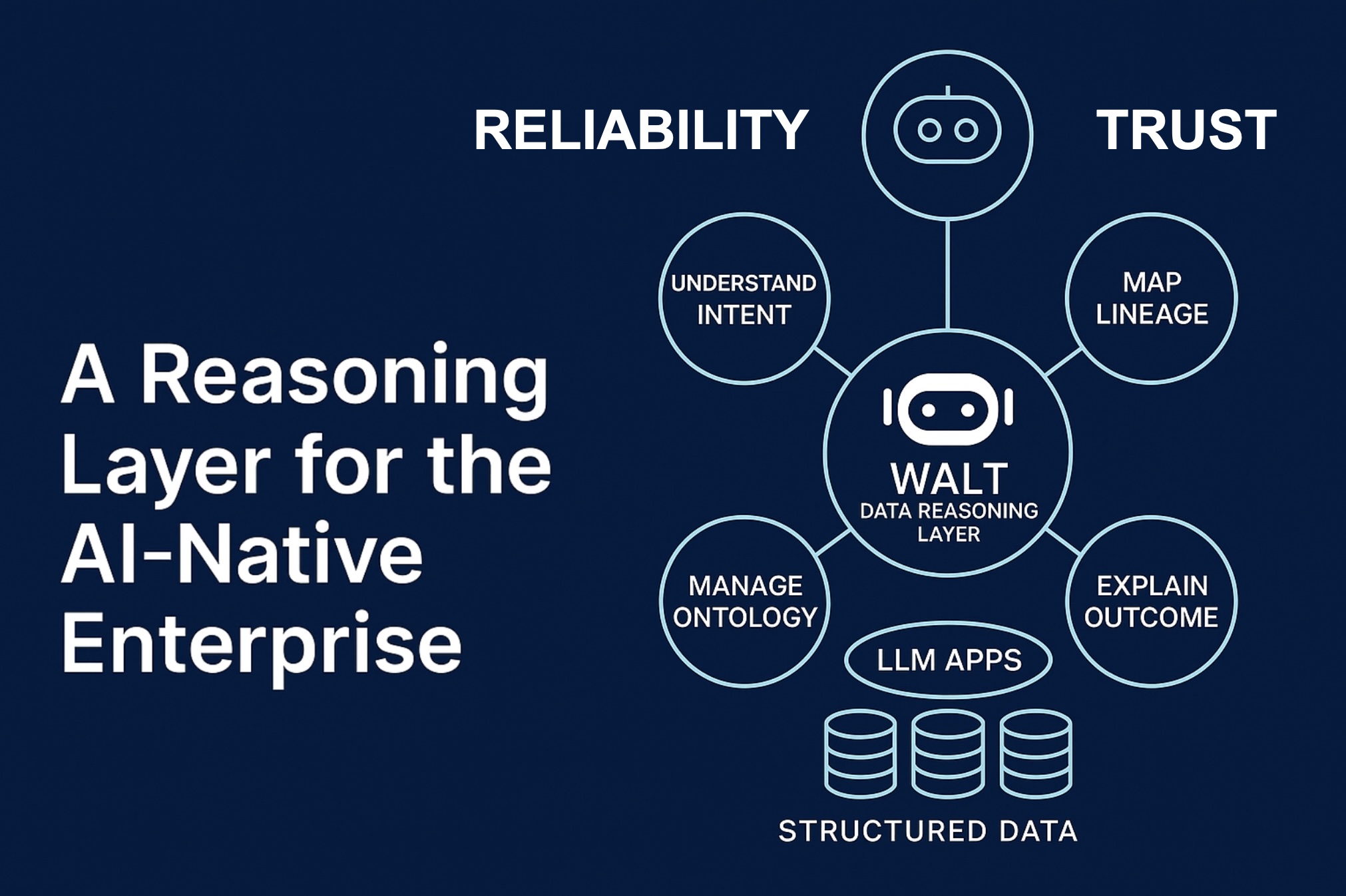

The video introduces a solution using agentic AI to automate this process. An agent, named Atlas (bundled with a system called Walt), is presented as a data engineering agent. This agent has the ability to reason and introspect the data schema.

Here's how we addresses the schema evolution challenge:

• Detection: We constantly works through different pieces of data. It reads the existing schema registry and compares it with the incoming messages. It detects when a schema evolution has occurred.

• Introspection and Reasoning: The agent doesn't just detect changes; it can understand them. The video explains that Atlas looks at different versions of the data, reads the schema registry, and can even read the source code from places like GitHub. By combining the message shape information with the source code, Atlas can triangulate the purpose of the new fields. For instance, when the "phone" field was introduced in version 1.1, Atlas detected it, figured out it was a new field, and even described it as the "applicant's phone number".

• Automated Schema Update: Once Atlas understands the new schema and the purpose of the fields, it automatically updates the schema registry. The demonstration shows the agent adding the new fields it detected to the schema registry and even performing QA on the changes.

• Data Availability: With the schema registry updated, the new data fields immediately become available for querying and analytics without any human involvement. The example shows that after Atlas updated the schema, asking about the onboarding funnel again now included the newly added "phone" field.

The demonstration is further extended by showing Atlas handling multiple version releases (1.1, 1.2, 1.3) simultaneously. Even when message shapes become significantly more complex, potentially including large unstructured data like PDFs within a field, Atlas can detect the changes, reason about them (using its ability to read code), and automatically update the schema registry to make the entire funnel available.

In essence, data agents like Atlas can automate the tedious and error-prone process of managing schema evolution from upstream data producers, allowing new data fields to be leveraged for real-time intelligence and analytics much faster and without requiring manual intervention from product managers, data engineers, or developers for every schema change.