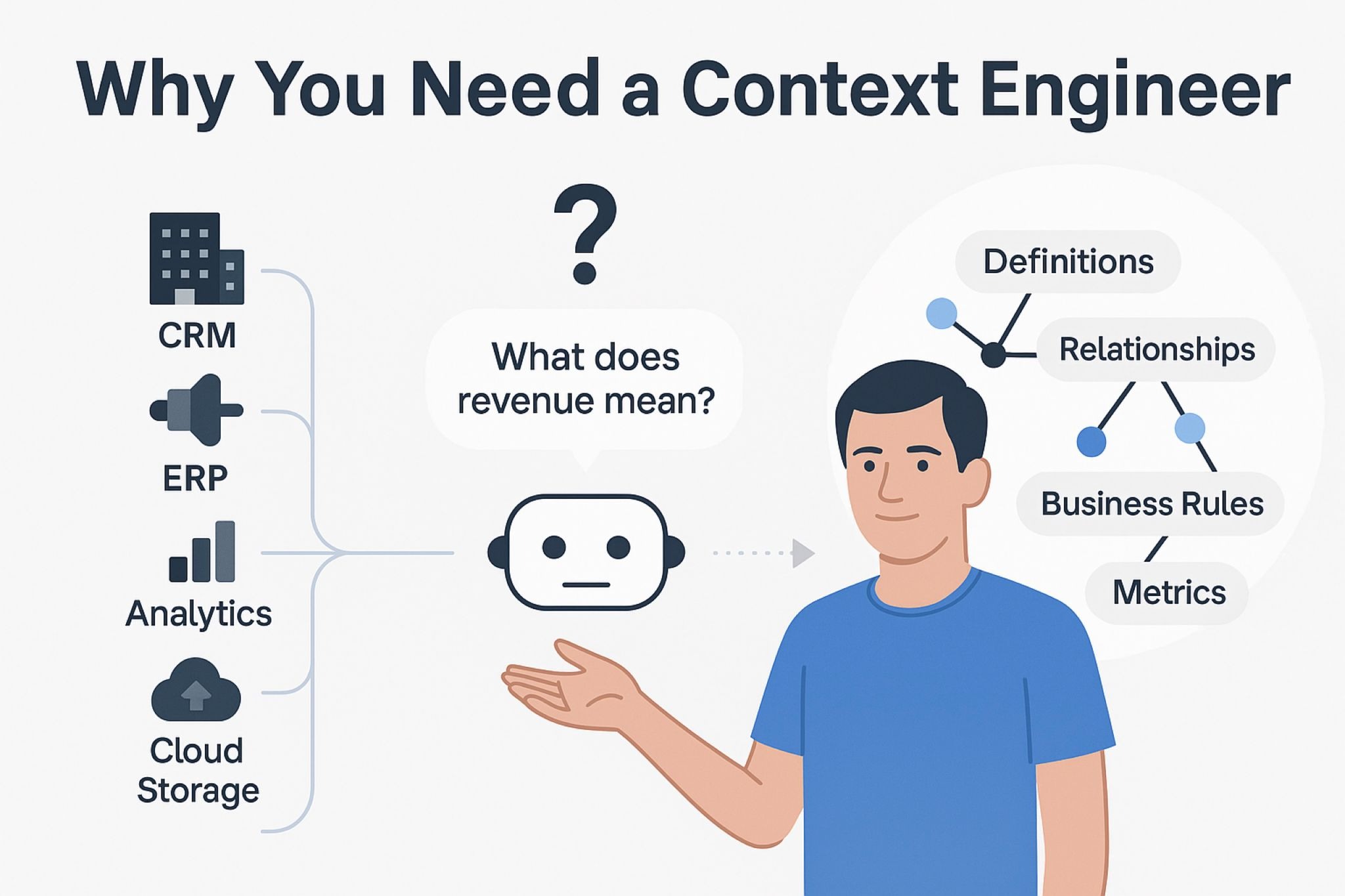

In the rush to adopt AI, it was tempting to believe that hooking an LLM into your data stack was all you needed. But as many data leaders have discovered, AI doesn’t magically understand your business, your metrics, or your domain. The missing piece is context.

Ah, so the obvious answer is to hire a context engineer, right?

A context engineer is a specialist whose job is to build, maintain, and refine the semantic and logical scaffolding that makes structured enterprise data intelligible and reliable to AI agents. They translate business vocabulary, domain rules, data lineage, transformations, and metric definitions into a form that an AI system can use consistently. Put simply, they imbue raw data with meaning so the AI doesn’t hallucinate or misinterpret it.

Here’s the value a context engineer can provide:

- Vocabulary & semantics alignment: Different teams may use the same term (e.g. “visibility”) with completely different meanings. The context engineer maps each team’s definition so that when an AI sees “visibility,” it knows which meaning applies in that context.

- Data canonicalization & lineage tracing: Modern data systems often accumulate multiple versions of the “same” metric (e.g. revenue, revenue_final, revenue_corrected). The context engineer inspects the data, models relationships, and builds lineage graphs to determine which source is authoritative and how derived metrics map to base tables.

- Metric / KPI harvesting & curation: If your organization already has dashboards, governance tools, or data catalogs, the context engineer ingests those definitions and aligns them. If not, they establish baseline KPI definitions that can be approved, refined, and made available to the AI layer.

- Logical consistency & QA enforcement: They implement quality guards, consistency checks, and canonical logic so that the same query yields the same answer over time. That discipline is crucial when you’re moving AI-infused workflows into production.
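To make vocabulary alignment concrete, here is a minimal Python sketch of a team-scoped glossary. The `TermDefinition` record and `Glossary` class are hypothetical (not any real product's API), and the SQL expressions are placeholders; the point is only that keying definitions by team and term lets one word carry different meanings without ambiguity.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class TermDefinition:
    """One team's meaning for a business term (hypothetical schema)."""
    team: str
    term: str
    definition: str
    sql_expression: str


class Glossary:
    """Maps (team, term) pairs to definitions, so one word can mean different things per team."""

    def __init__(self) -> None:
        self._entries: dict[tuple[str, str], TermDefinition] = {}

    @staticmethod
    def _key(team: str, term: str) -> tuple[str, str]:
        # Case-insensitive lookup: "Marketing" and "marketing" are the same team.
        return (team.strip().lower(), term.strip().lower())

    def add(self, entry: TermDefinition) -> None:
        key = self._key(entry.team, entry.term)
        if key in self._entries:
            # Refuse silent overwrites; changing a definition should be an explicit decision.
            raise ValueError(f"duplicate definition for {key}")
        self._entries[key] = entry

    def resolve(self, team: str, term: str) -> TermDefinition:
        try:
            return self._entries[self._key(team, term)]
        except KeyError:
            # Surface the gap instead of letting the AI guess a meaning.
            raise LookupError(f"no definition of {term!r} for team {team!r}") from None


glossary = Glossary()
glossary.add(TermDefinition("marketing", "visibility",
                            "Share of search impressions won",
                            "SUM(our_impressions) / SUM(total_impressions)"))
glossary.add(TermDefinition("supply_chain", "visibility",
                            "Share of shipments with live tracking",
                            "AVG(CASE WHEN is_tracked THEN 1.0 ELSE 0.0 END)"))

print(glossary.resolve("Marketing", "visibility").definition)
```

Raising `LookupError` for an unknown pair is a deliberate choice in this sketch: a missing definition is surfaced for a human to fill in rather than left for the model to guess.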

In short, a context engineer ensures that a natural-language query like “What’s driving our churn this quarter?” returns a factually grounded, business-aligned analysis rather than a hallucinated narrative. Problem solved, right?

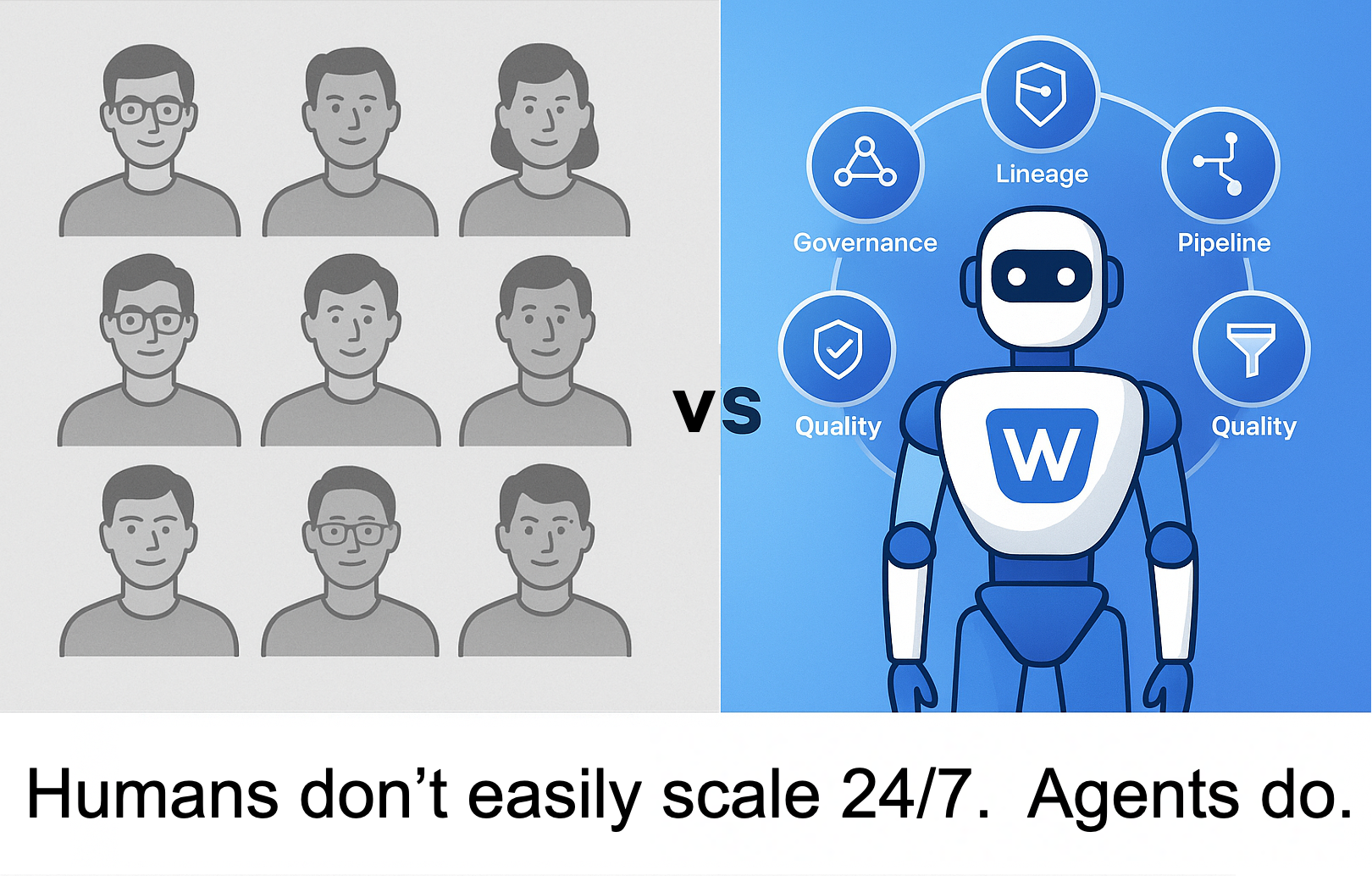

The Scalability Problem: You Probably Need Many Context Engineers

Here's the issue: data is never static. New data sources get onboarded, schemas evolve, business definitions shift, edge-case exceptions emerge, and governance rules change. Every time any of that happens, the context layer of your AI reasoning infrastructure needs updating or fixing. In a mature AI + data environment, that means a single context engineer (or even a small team) may struggle to keep up. You’ll want people on call 24/7 to:

- Monitor when AI agents’ answers drift or break.

- Adjust or extend the semantic mappings and lineage graphs.

- Remediate newly surfaced inconsistencies or conflicts.

- Onboard new data sources or business domains into the context fabric.

In effect, your context-engineering function becomes a perpetual, live operation. The scaling costs (headcount, latency, coordination overhead) can be significant. The more your organization’s data footprint and complexity grow, the more context engineers you might need. And coordinating across teams and ensuring coherence across definitions becomes increasingly painful at scale.

So while context engineers are essential, relying solely on human-driven context engineering is a brittle model. You’d prefer something that scales with your data growth, something that adapts automatically.

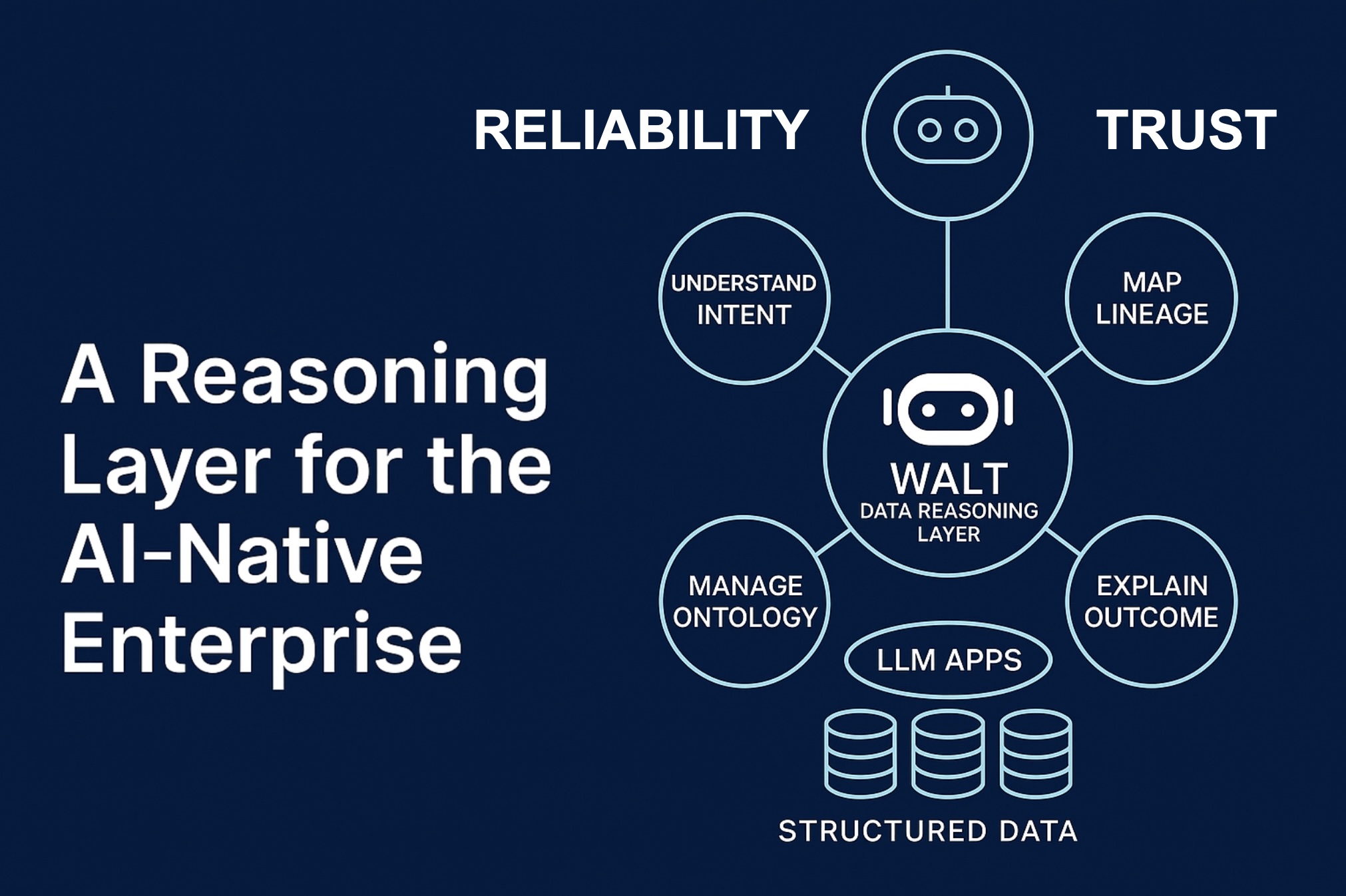

The WALT Alternative: Agentic AI

WALT proposes a smarter solution: agentic AI, with agents that do the heavy lifting, autonomously or semi-autonomously, to build and maintain the context layer. WALT's agents build a ReasonBase™, a living, adaptive reasoning layer, on top of your structured data. Instead of hiring an army of context engineers, you get WALT as your context engineering team: a network of AI agents that continuously reason over your structured data.

Imagine replacing a large team of full-time context engineers with a self-evolving data reasoning solution that:

- Monitors data consistency 24/7.

- Flags anomalies and suggests corrections automatically.

- Learns the semantic meaning of every data asset.

- Guarantees consistent, reproducible answers from your AI systems.

In essence, WALT will build your ReasonBase™, a living, adaptive data reasoning layer that learns, evolves, and maintains semantic consistency across your entire data estate.

Here’s how WALT mitigates the need for a battalion of human context engineers:

1. Automated introspection & relationship discovery

WALT continuously inspects your enterprise data environment — data warehouses, lineage tools, catalogs, dashboards — to discover relationships, dependencies, and transformations. It builds a vocabulary map and canonical model that evolves over time.
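Relationship discovery ultimately yields a dependency graph. The sketch below assumes the edges have already been extracted (for example, parsed from view definitions or exported from a lineage tool) and shows one plain way to walk that graph back to base tables with a breadth-first search. Table names are hypothetical, and this is not a description of how WALT performs introspection.

```python
from collections import defaultdict, deque


def build_upstream_index(edges):
    """edges: iterable of (source, target) pairs, meaning `target` is derived from `source`."""
    upstream = defaultdict(set)
    for source, target in edges:
        upstream[target].add(source)
    return upstream


def trace_to_base_tables(asset, upstream):
    """Breadth-first walk up the lineage graph; return the root assets `asset` depends on."""
    seen = {asset}
    queue = deque([asset])
    roots = set()
    while queue:
        node = queue.popleft()
        parents = upstream.get(node, set())  # .get avoids inserting keys into the defaultdict
        if not parents:
            roots.add(node)  # nothing further upstream: treat it as a base table
        for parent in parents:
            if parent not in seen:  # `seen` also guards against cycles
                seen.add(parent)
                queue.append(parent)
    return roots


# Hypothetical edges, e.g. parsed from view definitions or a lineage tool's export.
EDGES = [
    ("raw.orders", "staging.orders_clean"),
    ("staging.orders_clean", "mart.revenue"),
    ("mart.revenue", "mart.revenue_corrected"),
    ("raw.refunds", "mart.revenue_corrected"),
]

upstream = build_upstream_index(EDGES)
print(sorted(trace_to_base_tables("mart.revenue_corrected", upstream)))
```

For these hypothetical edges, tracing `mart.revenue_corrected` returns `raw.orders` and `raw.refunds`, which tells a reviewer which base tables any canonical revenue definition has to account for.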

2. Continuous canonicalization & reasoning

Rather than relying on ad-hoc heuristic fixes, WALT canonicalizes business concepts (e.g. metric definitions, KPI forms) so AI agents or users querying the system always refer to the “correct” canonical version. That reduces the proliferation of divergent metric copies.
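A minimal sketch of alias canonicalization, assuming a hypothetical, hand-maintained registry that maps each canonical metric to its known aliases. It normalizes names, refuses an alias that two metrics both claim, and returns `None` for unknown names so they can be routed for review. This illustrates the general idea only; it is not WALT's canonicalization logic.

```python
import re

# Hypothetical registry: canonical metric name -> known aliases found in the warehouse.
CANONICAL_METRICS = {
    "revenue": {"revenue_final", "revenue_corrected", "total_revenue"},
    "churn_rate": {"churn", "customer_churn"},
}


def normalize_name(name):
    """Lowercase and turn runs of spaces/hyphens into underscores: 'Revenue Final' -> 'revenue_final'."""
    return re.sub(r"[\s\-]+", "_", name.strip().lower())


def build_alias_index(registry):
    """Invert the registry into alias -> canonical name, rejecting aliases claimed by two metrics."""
    index = {}
    for canonical, aliases in registry.items():
        for alias in aliases | {canonical}:
            key = normalize_name(alias)
            if index.get(key, canonical) != canonical:
                raise ValueError(f"{alias!r} maps to both {index[key]!r} and {canonical!r}")
            index[key] = canonical
    return index


def canonicalize(name, index):
    """Return the canonical metric for `name`, or None so unknown names go to a human."""
    return index.get(normalize_name(name))


ALIAS_INDEX = build_alias_index(CANONICAL_METRICS)
print(canonicalize("Revenue Final", ALIAS_INDEX))
```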

3. Agentic KPI harvesting and human-in-the-loop curation

WALT doesn’t force you to start from scratch. It can harvest KPI definitions from your existing BI systems, governance tools, or dashboards. And where ambiguity arises, it surfaces definitions for data stewards or domain experts to approve. Thus, the human input is directed, high-leverage, and fast.
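The harvest-then-escalate pattern can be sketched as follows, assuming each source has already been read into `(source, kpi_name, formula)` tuples. Source names and formulas are hypothetical. Formulas that match after a crude case-and-whitespace normalization are grouped together; any KPI with more than one surviving variant goes to a review queue instead of being resolved automatically.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class HarvestResult:
    agreed: dict = field(default_factory=dict)        # kpi -> the single formula all sources use
    needs_review: dict = field(default_factory=dict)  # kpi -> {formula: [sources]} for a data steward


def normalize_formula(formula):
    # Crude equivalence: ignore case and whitespace only. Lowercasing would also
    # merge string literals that differ only in case.
    return " ".join(formula.lower().split())


def harvest(definitions):
    """definitions: iterable of (source, kpi_name, formula) tuples from BI tools, catalogs, etc."""
    variants_by_kpi = defaultdict(lambda: defaultdict(list))
    for source, kpi, formula in definitions:
        variants_by_kpi[kpi][normalize_formula(formula)].append(source)

    result = HarvestResult()
    for kpi, variants in variants_by_kpi.items():
        if len(variants) == 1:
            result.agreed[kpi] = next(iter(variants))
        else:
            # Conflicting definitions: route to a human instead of silently picking one.
            result.needs_review[kpi] = {f: sorted(srcs) for f, srcs in variants.items()}
    return result


harvested = harvest([
    ("bi_dashboard", "churn_rate", "lost_customers / starting_customers"),
    ("data_catalog", "churn_rate", "LOST_CUSTOMERS /  starting_customers"),
    ("bi_dashboard", "avg_order_value", "revenue / orders"),
    ("finance_sheet", "avg_order_value", "net_revenue / orders"),
])
print(harvested.agreed)
print(harvested.needs_review)
```

String normalization is only a rough proxy for semantic equivalence: `a * b` and `b * a` would be flagged as a conflict, and two identical strings can still refer to different tables, so the human check remains necessary.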

4. Stable Logic Models (not free-wheeling LLM prompts)

A perennial problem: letting LLMs generate SQL or logic ad hoc leads to nondeterminism, so the same question can produce subtly different queries, and therefore different results, over time. WALT avoids this by using “Stable Logic Models,” meaning identical questions lead to consistent answers every time, with continuous evaluation and refinement.
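One simple way to get "same question, same query" behavior is to pin the first reviewed query for each normalized question and reuse it. The sketch below uses that swapped-in technique (pinning, essentially memoization); it is not WALT's Stable Logic Models, whose internals the article does not describe. `PinnedQueryRegistry` is hypothetical, and `fake_generator` stands in for a nondeterministic generator such as an LLM.

```python
import re
from typing import Callable, Dict


def normalize_question(question: str) -> str:
    """Lowercase, drop punctuation, collapse whitespace."""
    text = re.sub(r"[^\w\s]", "", question.lower())
    return " ".join(text.split())


class PinnedQueryRegistry:
    """Generate a query once per normalized question, then reuse that exact query."""

    def __init__(self, generate: Callable[[str], str]) -> None:
        self._generate = generate  # hypothetical: e.g. an LLM call whose output was reviewed
        self._pinned: Dict[str, str] = {}

    def query_for(self, question: str) -> str:
        key = normalize_question(question)
        if key not in self._pinned:
            self._pinned[key] = self._generate(question)
        return self._pinned[key]

    def invalidate(self, question: str) -> None:
        """Drop a pinned query (e.g. after a schema change) so it is regenerated next time."""
        self._pinned.pop(normalize_question(question), None)


calls = []


def fake_generator(question: str) -> str:
    # Stand-in for a nondeterministic generator: every call returns a different draft.
    calls.append(question)
    return f"-- draft {len(calls)}\nSELECT COUNT(*) FROM churned_customers"


registry = PinnedQueryRegistry(fake_generator)
first = registry.query_for("What's driving our churn this quarter?")
second = registry.query_for("what's driving our churn this quarter")
print(first == second, len(calls))
```

Exact-match normalization only catches trivially rephrased questions; genuine paraphrases would need semantic matching, and pinned queries have to be invalidated when schemas or definitions change.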

5. Evaluation-driven reinforcement & self-correction

A big differentiator: WALT continually evaluates the answers it gives, flags discrepancies or drift, and strengthens its ReasonBase accordingly overnight. This kind of feedback loop ensures the context layer evolves in step with your data and business changes, rather than constantly lagging behind.
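An evaluation loop of this kind can be approximated with a fixed set of benchmark questions whose approved answers are re-checked on a schedule. In this sketch the benchmarks, tolerance, and `current_answers` stand-in are all hypothetical. The questions are pinned to a closed period so their correct answers should not change; any movement beyond tolerance then points at drift in the reasoning layer rather than in the business.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Benchmark:
    question: str
    expected: float        # answer approved by a human when the benchmark was created
    rel_tol: float = 0.01  # allowed relative difference before it counts as drift


def find_drift(benchmarks: List[Benchmark],
               answer_fn: Callable[[str], float]) -> List[Tuple[str, float, float]]:
    """Re-ask every benchmark; return (question, expected, actual) for out-of-tolerance answers."""
    drifted = []
    for b in benchmarks:
        actual = answer_fn(b.question)
        if not math.isclose(actual, b.expected, rel_tol=b.rel_tol):
            drifted.append((b.question, b.expected, actual))
    return drifted


# Hypothetical benchmarks; "Q3" here refers to an already-closed quarter.
BENCHMARKS = [
    Benchmark("Q3 revenue", 1_000_000.0),
    Benchmark("Q3 churn rate", 0.05),
]

# Hypothetical stand-in for the AI system under test.
current_answers = {"Q3 revenue": 1_004_000.0, "Q3 churn rate": 0.07}

print(find_drift(BENCHMARKS, current_answers.__getitem__))
```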

Because of these capabilities, WALT reduces your reliance on scaling a large team of human context engineers. Instead, you have an autonomous, agentic system that behaves like a context engineering team, continuously running, adapting, and validating.

In Summary

A context engineer is critical for transforming raw structured data into a meaning-rich foundation that AI systems can reliably use. But expecting a human-only team to manage that layer 24/7 at scale leads to a system that is brittle, expensive, and subject to lag.

WALT’s agentic AI represents a compelling alternative: our agents continuously introspect, canonicalize, harvest KPIs, validate their own outputs, detect drift, and evolve the reasoning layer, effectively acting as a context-engineering team that never sleeps. The result is a dynamic, scalable, living context layer (the ReasonBase) that lets you deploy AI over your enterprise structured data with confidence, consistency, and significantly lower overhead.