Is data a bottleneck to accelerating AI adoption at your company? You are not alone. Every major enterprise has made massive investments in data lakes, warehouses, and visualization layers. Yet when it comes to asking meaningful business questions across fragmented systems with inconsistent KPIs and multi-dialect SQL environments, the results are still slow, unreliable, or, worse, untrustworthy.

WALT AI was built to fix this. Designed by engineers who’ve lived the complexity of enterprise data firsthand, WALT bridges the gap between large language models (LLMs) and enterprise database systems, without letting stochastic AI models run wild inside your .

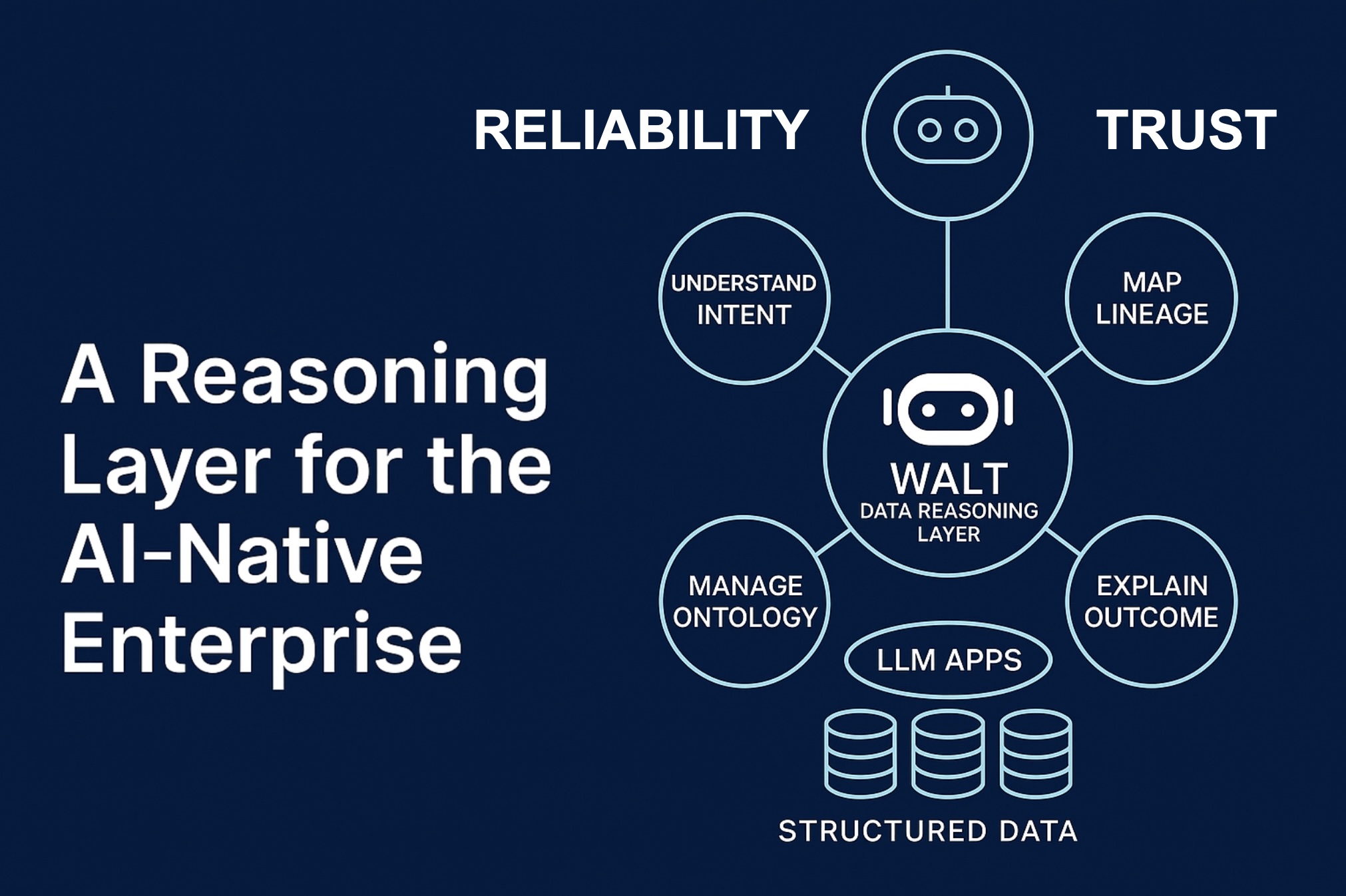

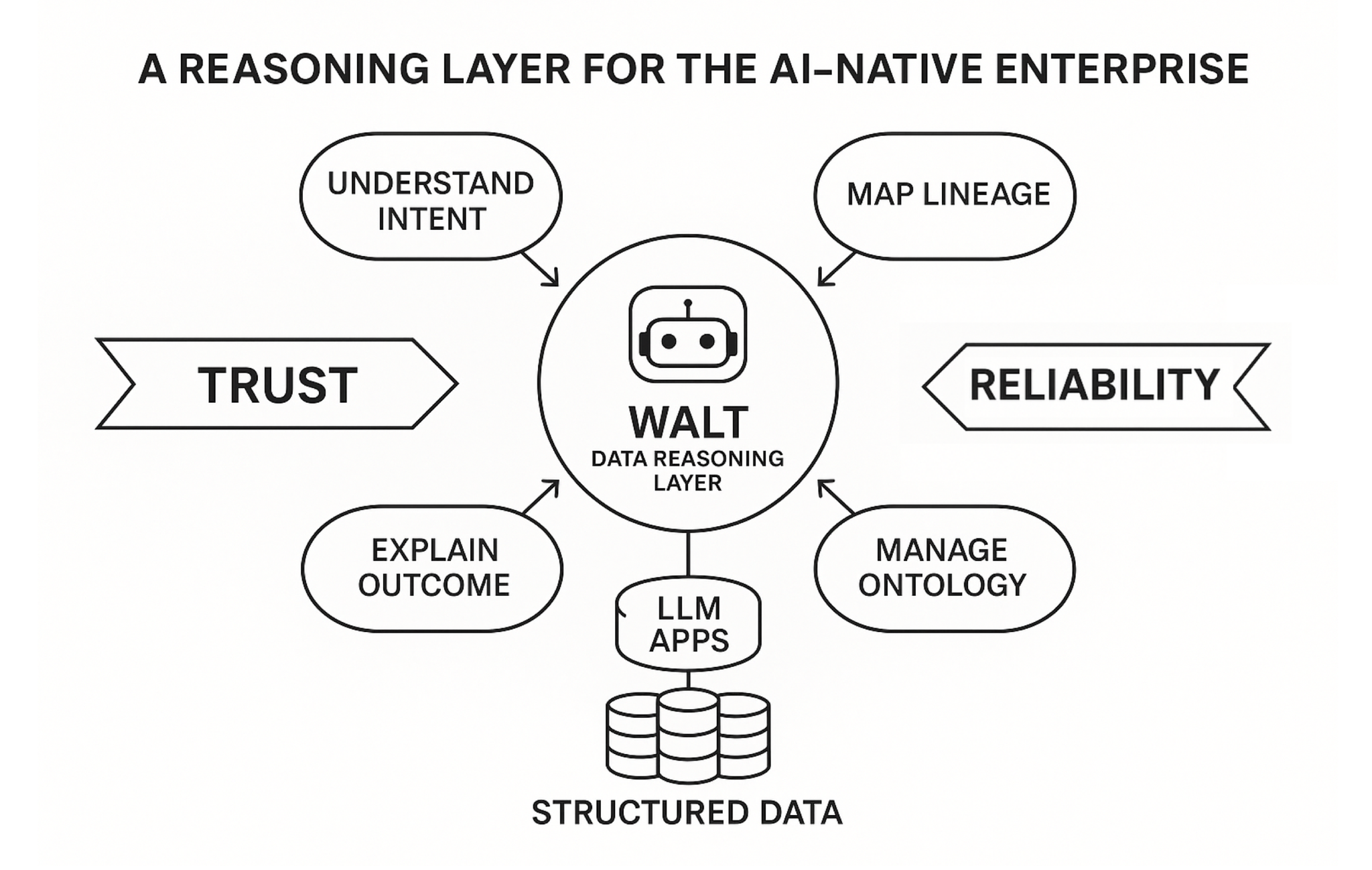

This is not another chatbot bolted onto your warehouse. It’s a data reasoning layer, a platform that interprets human intent, translates it into precise, reproducible queries across 20+ SQL dialects, and returns grounded, explainable answers you can trust.

The Vision: Interpreting Intent Without Letting LLMs Touch Your Data

Most AI assistants for analytics rely on LLMs to directly generate SQL. That approach might look elegant in a demo, but in production, as you may already have discovered, it can lead to chaos. LLMs hallucinate joins, miss filters, and generate queries that fan out and overload clusters.

WALT takes a radically different approach. Its proprietary intent compiler decodes the user’s question and translates it through a semantic layer and knowledge graph, not raw prompt-to-SQL generation. The result is deterministic, efficient code that executes the same way every time: no surprises, no broken dashboards, no data drift.
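To make the idea concrete, here is a minimal sketch of compiling a structured intent through a semantic layer instead of generating SQL from a prompt. It is not WALT's actual compiler: the `SEMANTIC_LAYER` contents, the `Intent` shape, and the `compile_intent` function are all hypothetical names chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical semantic layer: each metric has exactly one governed definition.
SEMANTIC_LAYER = {
    "net_revenue": {
        "table": "orders",
        "expression": "SUM(amount - refund_amount)",
        "dimensions": {"region": "region", "order_month": "order_month"},
    },
}


@dataclass(frozen=True)
class Intent:
    """Structured form of a question, assumed to be produced upstream."""
    metric: str
    group_by: tuple[str, ...] = ()
    filters: tuple[tuple[str, str], ...] = ()  # (dimension, value) pairs


def compile_intent(intent: Intent) -> tuple[str, tuple[str, ...]]:
    """Return (sql, params). The same intent always yields the same SQL text."""
    metric = SEMANTIC_LAYER[intent.metric]  # unknown metrics raise KeyError
    dims = metric["dimensions"]             # unknown dimensions raise KeyError
    # Sort so that argument order never changes the generated query.
    group_cols = [dims[d] for d in sorted(intent.group_by)]
    ordered_filters = sorted(intent.filters)

    select_parts = group_cols + [f"{metric['expression']} AS {intent.metric}"]
    sql = f"SELECT {', '.join(select_parts)} FROM {metric['table']}"
    if ordered_filters:
        # Values are bound as parameters, never spliced into the SQL string.
        sql += " WHERE " + " AND ".join(f"{dims[d]} = ?" for d, _ in ordered_filters)
    if group_cols:
        sql += " GROUP BY " + ", ".join(group_cols)
    return sql, tuple(value for _, value in ordered_filters)


intent = Intent("net_revenue", group_by=("region",), filters=(("order_month", "2024-01"),))
sql, params = compile_intent(intent)
```

Because the query text is assembled only from governed definitions, a question that references an unknown metric or dimension fails loudly rather than producing a plausible but wrong join.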

Our agents handle translation across 20+ SQL dialects, ensuring consistent logic whether you’re running on Snowflake, Databricks, MySQL, Trino, or Postgres. The outcome is enterprise-grade reliability: every answer is traceable, every KPI grounded, every transformation auditable.
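As a rough illustration of why dialect translation matters, the sketch below renders one logical query (monthly totals) for three engines. It is a simplified assumption of how such a translator could look, not WALT's implementation; the function names are hypothetical, and only identifier quoting and month bucketing are handled.

```python
# MySQL quotes identifiers with backticks by default; PostgreSQL and Trino
# use double quotes. An embedded quote character is escaped by doubling it.
QUOTE_CHAR = {"mysql": "`", "postgres": '"', "trino": '"'}


def quote_ident(name: str, dialect: str) -> str:
    q = QUOTE_CHAR[dialect]
    return q + name.replace(q, q + q) + q


def month_bucket(column: str, dialect: str) -> str:
    """Expression that buckets a date column by month."""
    col = quote_ident(column, dialect)
    if dialect == "mysql":
        # MySQL has no DATE_TRUNC; DATE_FORMAT returns a string such as '2024-01-01'.
        return f"DATE_FORMAT({col}, '%Y-%m-01')"
    # PostgreSQL and Trino both provide date_trunc(unit, value).
    return f"DATE_TRUNC('month', {col})"


def monthly_total(table: str, date_col: str, amount_col: str, dialect: str) -> str:
    bucket = month_bucket(date_col, dialect)
    return (
        f"SELECT {bucket} AS month_start, "
        f"SUM({quote_ident(amount_col, dialect)}) AS total "
        f"FROM {quote_ident(table, dialect)} GROUP BY {bucket}"
    )


for dialect in ("mysql", "postgres", "trino"):
    print(dialect, "->", monthly_total("orders", "order_date", "amount", dialect))
```

Note that the MySQL variant yields a string bucket while the others yield a date or timestamp, which is exactly the kind of subtle difference a translation layer has to normalize.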

Data Where It Lives: No Movement, No Duplication, No Risk

Unlike other tools that copy or move data, WALT queries data in place. It connects directly to live systems (all major databases and data lakes) and executes queries where the data already resides. That means no latency from replication, no loss of governance control, and no new performance risk to your production systems.

This architecture supports both batch and near-real-time contexts. For streaming environments, WALT integrates with Kafka-backed persistence layers, enabling analytics and AI workflows that are current to the latest ingested event without compromising stability.

For large organizations already operating under tight compliance mandates, this architecture preserves data sovereignty while delivering modern AI-driven insights.

The Knowledge Graph: Turning Fragmented Metadata into a Living System of Record

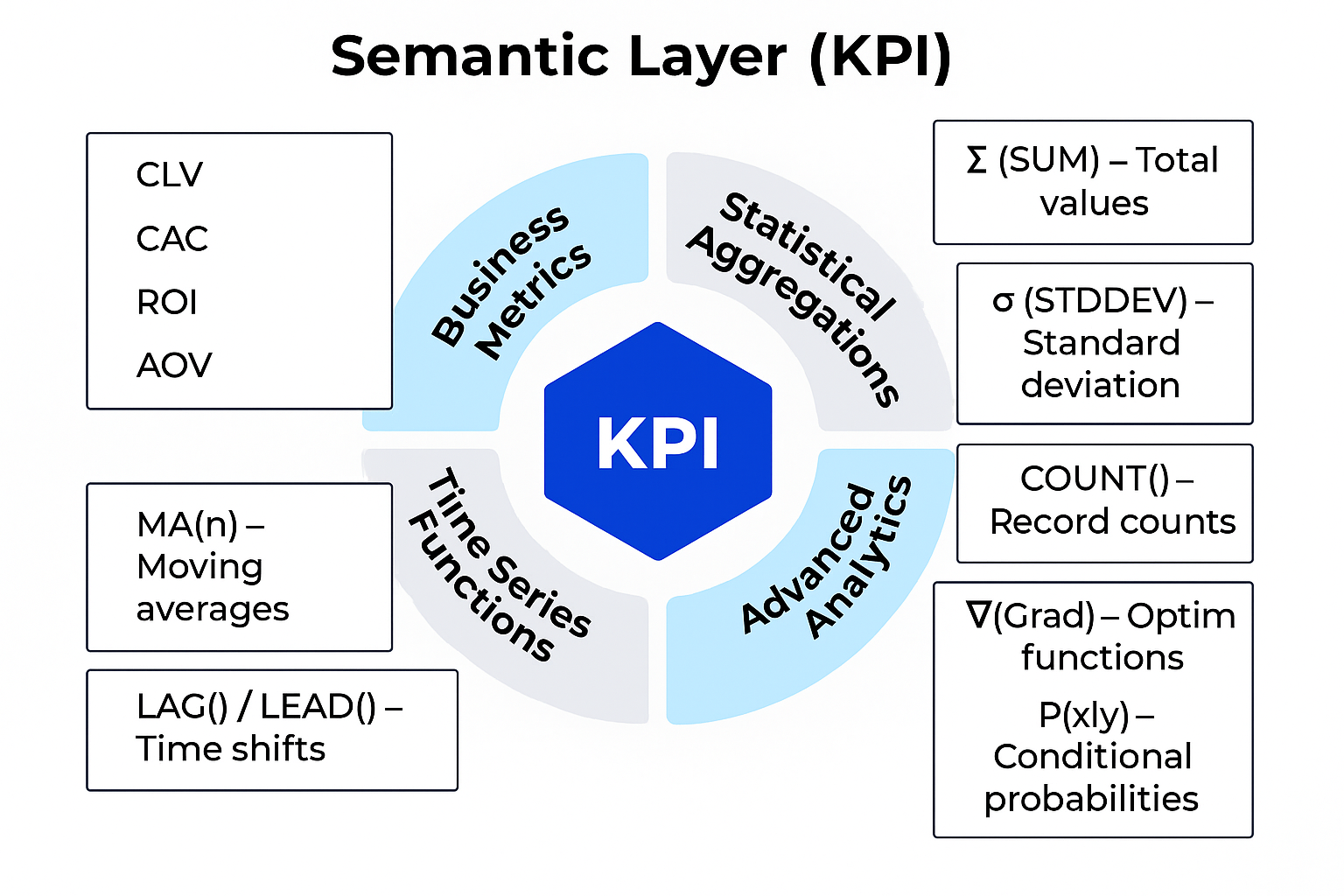

Every big company struggles with semantics. “Revenue” means one thing to finance, another to marketing, and something else to operations. Those differences derail AI readiness, because LLMs and analytics models cannot reason without shared meaning.

WALT solves this with a dynamic knowledge graph: a continuously self-learning map of your enterprise data and relationships. It introspects across DBT models, query logs, Git repositories, and dashboard specs (from Tableau, Power BI, Superset, and many others). It reconstructs lineage, detects relationships (including many-to-many bridge tables), and aligns your data sources to a common ontology that defines metrics and KPIs the way your business actually uses them.
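One piece of this, spotting many-to-many bridge tables, can be approximated from foreign-key metadata alone. The sketch below uses a deliberately strict heuristic (every column is a foreign key, and at least two distinct tables are referenced); the input shapes and the `find_bridge_tables` name are assumptions for illustration, and a production system would likely weigh query logs and naming conventions as well.

```python
from collections import defaultdict


def find_bridge_tables(foreign_keys, columns):
    """Return {bridge_table: [referenced tables]}.

    foreign_keys: iterable of (table, column, referenced_table) triples.
    columns: dict mapping each table name to the set of its column names.
    """
    fk_columns = defaultdict(dict)  # table -> {fk column: referenced table}
    for table, column, referenced in foreign_keys:
        fk_columns[table][column] = referenced

    bridges = {}
    for table, fks in fk_columns.items():
        targets = sorted(set(fks.values()))
        only_fk_columns = set(columns[table]) <= set(fks)
        if len(targets) >= 2 and only_fk_columns:
            bridges[table] = targets
    return bridges


foreign_keys = [
    ("student_course", "student_id", "students"),
    ("student_course", "course_id", "courses"),
    ("orders", "customer_id", "customers"),
]
columns = {
    "student_course": {"student_id", "course_id"},
    "orders": {"id", "customer_id", "amount"},
}
print(find_bridge_tables(foreign_keys, columns))
```

Here `student_course` is flagged as a bridge between `courses` and `students`, while `orders` is not, because it carries its own attributes and references only one table.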

WALT continuously re-evaluates new incoming queries, updating its graph to reflect evolving logic and schema changes. That means your organization never drifts out of semantic sync. Your AI is always aligned with your business reality.

Deployment Built for the Enterprise: Secure, Contained, and Fully Controlled

CIOs, CISOs, and security architects will appreciate that WALT’s entire platform ships as a single Docker image containing both frontend and backend components. It can be deployed in your VPC or on-prem, using Helm charts for full infrastructure control.

Metadata and graph data live in a Postgres instance that you own and manage. All LLM interactions (via OpenAI, Anthropic, or Google APIs) are configured with zero-retention guarantees, and customers can bring their own API keys to comply with internal security policies.

No GPUs are required locally, since all model inference calls are externalized, allowing seamless updates when new LLMs are released. It’s the best of both worlds: the agility of SaaS with the security posture of on-prem deployment.

Continuous Intelligence: A System That Self-Improves Nightly

Traditional analytics pipelines degrade over time. Schemas change, definitions drift, dashboards break, and someone in data engineering spends weekends chasing null values. WALT eliminates that pain.

Each night, it performs self-reflection: analyzing logs, questions, and metadata to detect inconsistencies, missing data, or ambiguous KPIs. It then proactively alerts data leaders, recommending fixes or enhancements to improve accuracy and completeness.
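A simplified version of one such check, flagging KPIs that appear with more than one definition, might look like the following. This is a sketch under stated assumptions rather than WALT's nightly job: the observation tuples, the `find_ambiguous_kpis` name, and the whitespace-and-case normalization are all illustrative choices.

```python
import re
from collections import defaultdict


def normalize(expression: str) -> str:
    """Collapse whitespace and case so trivially different spellings match.

    Used for comparison only; lowercasing would alter string literals.
    """
    return re.sub(r"\s+", " ", expression.strip()).lower()


def find_ambiguous_kpis(observations):
    """observations: iterable of (kpi_name, sql_expression, source) triples.

    Returns {kpi_name: {normalized_expression: [sources]}} for every KPI
    that was observed with more than one distinct definition.
    """
    definitions = defaultdict(lambda: defaultdict(set))
    for name, expression, source in observations:
        definitions[name.lower()][normalize(expression)].add(source)

    return {
        name: {expr: sorted(sources) for expr, sources in sorted(variants.items())}
        for name, variants in definitions.items()
        if len(variants) > 1
    }


observations = [
    ("revenue", "SUM(amount)", "finance_dashboard"),
    ("Revenue", "sum(amount  - refunds)", "marketing_report"),
    ("revenue", "sum(amount)", "query_log"),
    ("orders", "COUNT(*)", "query_log"),
]
for kpi, variants in find_ambiguous_kpis(observations).items():
    print(kpi, variants)
```

In this toy input, `revenue` is reported with two competing definitions and the sources that use each, which is the raw material for an alert to a data leader.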

This constant self-correction cycle turns WALT into a living data reasoning system, one that gets smarter the more it’s used, and one that reduces manual maintenance across your entire analytics stack.

Real-World Proof: Serving Multibillion-Dollar Companies

WALT’s vision isn’t theoretical; it’s battle-tested. The platform is already deployed inside a $3 billion global marketing agency holding company, serving brands such as Airbus, Bayer, and Stellantis, and a $9 billion outdoor apparel company with multiple iconic brands.

These organizations face enormous complexity: billions of rows across multiple systems, dozens of definitions of “conversion” or “customer,” and constant schema churn. WALT helps them unify that chaos, enabling analysts, data scientists, and executives to ask complex questions and receive grounded, verifiable answers in seconds. Instead of hiring armies of “context engineers” to reconcile definitions, these companies let WALT’s ReasonBase™ do the semantic heavy lifting, so humans can focus on strategy, not syntax.

Why It Matters: The Enterprise AI Gap

Every enterprise wants to be “AI-ready.” But most initiatives stall before they even start, because the foundation, the data layer, isn’t context-ready.

LLMs are powerful, but they’re only as smart as the definitions and relationships beneath them. If your systems don’t know how you define customer lifetime value, or whether “sales” includes returns, your AI will make confident but wrong predictions.

WALT fixes this problem by introducing a reasoning layer between data and AI, a canonical source of truth that encodes semantics, lineage, and business rules so that every query, model, and agent operates from the same understanding.

A Platform for Builders: APIs, SDKs, and Integrations

For enterprises that prefer to build their own interfaces, WALT offers both a UI portal and an MCP API. Engineering teams can embed WALT’s reasoning capabilities directly into existing workflows, dashboards, or AI applications.
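Assuming “MCP” here refers to the Model Context Protocol, a client would invoke a server tool with a JSON-RPC 2.0 `tools/call` request. The sketch below only builds such a request; the tool name `ask_question` and its `question` argument are hypothetical placeholders, since the tools WALT actually exposes are not listed here.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests carry a unique id per call


def build_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build a Model Context Protocol `tools/call` request envelope."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and argument, for illustration only.
request = build_tool_call(
    "ask_question",
    {"question": "What was net revenue by region last quarter?"},
)
print(json.dumps(request, indent=2))
```

In practice an MCP client library would handle the transport and session handshake; the point of the sketch is simply that an internal tool can pass a plain-language question as a structured call.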

The company provides SDKs, detailed documentation, and deployment guides to help teams configure Vault environments, connect data sources, and integrate authentication and monitoring pipelines.

This flexibility allows organizations to use WALT as a turnkey portal for business users or as an embedded reasoning engine powering their internal tools.

The Future: A Reasoning Layer for the AI-Native Enterprise

WALT’s roadmap is built around one idea: the modern enterprise doesn’t just need a data warehouse or a semantic layer. It needs a data reasoning layer.

That layer must understand intent, map lineage, manage ontology, and explain outcomes, all while staying secure, reproducible, and grounded in the organization’s real data.

As enterprises adopt agentic architectures and LLM-driven applications, the reasoning layer becomes the connective tissue that ensures reliability and trust. WALT is that layer, what we call the ReasonBase.

The Bottom Line

For CTOs, CDOs, and Chief Data Engineers at scale, WALT is not a toy. It’s a governed AI-native foundation for transforming how enterprises reason over data.

By combining secure deployment, deterministic query generation, continuous learning, and grounded explainability, WALT turns disconnected data assets into a living, self-maintaining intelligence system.

In an era when AI hype exceeds delivery, WALT delivers something rarer: truth at scale.